American Energy Independence: The Great Shake-up

[Originally published in The Boston Globe Ideas section.]

EVER SINCE AMERICANS had to briefly ration gas in 1973, “energy independence” has been one of the long-range goals of US policy. Presidents since Richard Nixon have promised that America would someday wean itself off its reliance on foreign oil and gas, a dependence that leaves us vulnerable to the outside world and has long been seen as a gaping hole in America’s national security. It also handcuffs our foreign policy, entangling America in unstable petroleum-producing regions like the Middle East and West Africa.

Given the United States’ huge appetite for fuel, energy independence has always seemed more of a dream than a realistic prospect. But today, nearly four decades later, energy independence is starting to come into view. Sustained high oil prices have made it economically viable to exploit harder-to-reach deposits. Techniques pioneered over the last decade, with US government support, have made it possible to extract shale oil more efficiently. It helps, too, that Americans have kept reducing their petroleum consumption, a trend driven as much by high prices as by official policy. Total oil consumption peaked at 20.7 million barrels per day in 2004. By 2010, the most recent year tracked in the CIA Factbook, consumption had fallen by nearly a tenth.

Last year, the United States imported only 40 percent of the oil it consumed, down from 60 percent in 2005. And by next year, according to the US Energy Information Administration, the United States will need to import only 30 percent of its oil. That’s been driven by an almost overnight jump in domestic oil production, which had remained static at about 5 million barrels per day for years, but is at 7 million now and will be at 8.5 million by the end of 2014. If these trends continue, the United States will be able to supply all its own energy needs by 2030 and be able to export oil by 2035. In fact, according to the government’s latest projections, the country is on track to become the world’s largest oil producer in less than a decade.

Yet as this once unimaginable prospect becomes a realistic possibility, it’s far from clear that it will solve all the problems it was supposed to. As much as boosters hope otherwise, energy independence isn’t likely to free America from its foreign policy entanglements. And at worst, say some skeptics who specialize in energy markets, it might create a whole new host of them, subjecting America to the same economic buffeting that plagues most oil exporters, and handing China even more global influence as the world’s behemoth consumer.

As much as the shift brings opportunities, however, it is also likely to open the United States up to liabilities we have not yet had to face. The consequences may be both good and bad, enriching and destabilizing for US interests—but they will certainly have a major impact on our geopolitics, in ways that the policy world is only just beginning to understand.

***

WHEN RICHARD NIXON was president, America consumed about one-third of the world’s oil, importing about 8.4 million barrels per day chiefly from the Middle East. The status quo hummed along until the Arab-Israeli war of 1973. The United States sent weapons to Israel, and the Arab states retaliated with a six-month oil embargo, refusing to sell oil to America. It was the only time in history that the “oil weapon” was effectively used, and it made a permanent impression on the United States.

Over time, the American response to the embargo came to include three major initiatives that still shape energy policy today. First, the government promoted lower oil consumption by pushing coal and natural gas power plants, home insulation, and mileage standards for cars. Second, the country drilled for more of its own oil. Third, and perhaps most important from a foreign-policy standpoint, the United States promoted a unified global oil market in which any country had the practical means to buy oil from any other. That meant that even if some countries couldn’t do business with each other—say, Iran and the United States—it wouldn’t affect the overall price and availability of oil. Other countries could fill in the gap.

The dreams of energy independence crossed party lines. Though liberals and conservatives differ on the means—how much we should rely on new drilling versus energy conservation—both parties have endorsed the quest. It was one of the few issues on which Presidents Carter and Reagan agreed.

America has made steady progress over the years, to the point where the nation’s total oil consumption has actually begun to drop. As this has happened, the high cost of global energy has also made it profitable to increase domestic production of natural gas and oil. A few months ago, both the US Energy Information Administration and the International Energy Agency predicted that if current production trends continue, the United States will overtake Saudi Arabia and Russia as the world’s largest oil producer in 2017.

Taken together, our slowing appetite and booming production mean that with a suddenness that has surprised many observers, the prospect of energy independence—technically speaking, at least—looms in the windshield.

Energy independence looks different today, however, than it did in the oil-shocked 1970s. For one thing, the energy market is a linchpin of the world order, and any big shift is likely to have costs to stability. Some analysts have warned that America’s growing oil production will create a glut that lowers prices, eating up the profits of oil-producing countries and destabilizing their regimes. (That’s in the short term, anyway; worldwide, oil demand is still rising fast.) Falling prices mean that countries that depend on oil will face sudden cash shortages. It’s easy to imagine how destabilizing that could be for a natural-resource power like Russia, for the monarchs of the Persian Gulf, or for the dictators in Central Asia. No matter how distasteful their rule, the prospect of an unruly transition, or worse still, a protracted conflict, in any of those countries could cause havoc.

In the long term, this is not necessarily a bad thing: Weakening oppressive or corrupt governments could ultimately be beneficial for the people of those countries. And a shift in the balance of power away from the Gulf monarchies of OPEC and toward the United States could have a democratizing effect. In any event, though, lower oil prices and a dynamic energy market would make today’s stable order far less predictable.

China’s economic rise has also changed the global energy equation. For now, China is largely without its own petroleum supplies and is replacing the United States as the largest importer. As China steps into the United States’ shoes as the world’s largest oil customer, it will gain influence in oil-producing regions as American influence wanes. It might also feel compelled to invest more heavily in an aggressive navy, fearing that the United States will no longer shoulder the responsibility of policing shipping lanes in the Persian Gulf and elsewhere—a costly security service that America pays for but which benefits the entire network of global trade.

Domestically, there’s also the “resource curse,” which afflicts countries that depend too heavily on extracted commodities like minerals or petroleum. Such industries don’t add much value to a society beyond the price the commodity fetches at market, and that price is notoriously fickle, meaning fortunes and jobs rise and fall with swings in global prices. The resource curse often brings corruption and autocracy as well. But economists are less concerned about that, since the United States already has an effective government and laws to thwart corruption, and because oil will still make up a minuscule overall share of the economy. Last year oil and gas extraction amounted to just 1.2 percent of the American gross domestic product.

***

THERE ARE STILL plenty of people who think that energy self-sufficiency will be an unalloyed good. Jay Hakes, who has pursued the goal as an energy official under the last three Democratic presidents, says that America will reap countless political and economic dividends. It will help the trade deficit, give American companies and workers benefits when oil prices are high, and insulate the country from supply shocks. It will also give Washington wider latitude when dealing with oil-producing countries, on which it will depend less. “There are some downsides,” he acknowledges, “but they’re outweighed by all the positives.”

One benefit that self-sufficiency won’t bring, it seems clear, is a sudden independence from the politics of the Middle East. The region produces about half the world’s oil, and Saudi Arabia alone has so much oil that it can raise its capacity at a moment’s notice to make up for a shortfall anywhere else in the world.

Already, America is largely independent of Middle Eastern oil as a consumer: Only about 15 percent of our supply comes from the region. But we do depend on a stable world market—even more so if we become a net exporter ourselves. So even if we don’t buy Saudi oil, we’ll still need a stable Saudi regime that can add a few million barrels a day to world flows, at a moment’s notice, to offset a disruption somewhere else.

Michael Levi, a fellow at the Council on Foreign Relations and author of the book “The Power Surge: Energy, Opportunity, and the Battle for America’s Future,” believes that the biggest risk of achieving a goal like energy independence is complacency: Without the pressures that importing oil has brought, we may have little reason to innovate our way out of fossil fuels altogether. The policies themselves have achieved a great deal of good, he points out—stabilizing the world’s energy markets, reducing consumption, and pushing us beyond “independence” toward renewable sources like wind and solar power (though today these still make up a vanishingly small portion of the US energy supply).

Levi argues that an American oil bonanza could easily remove the political incentives for long-term planning and sacrifice. “I get scared that we’ll become complacent and make foolish decisions because we believe we’ve become energy independent,” Levi says. Energy independence was a useful slogan to motivate America, but in reality, a sensible energy policy has to balance a plethora of competing concerns, from geopolitics and the environment to consumer demand and fuel’s importance to the economy.

“The real way to be energy independent,” he said, “is actually to not use oil.”

How cities reshape themselves when trust vanishes

Blast barriers in Baghdad, 2008. DONOVAN WYLIE / MAGNUM PHOTOS

[Originally published in The Boston Globe Ideas.]

BEIRUT — Everything that people love and hate about cities stems from the agglomeration of humanity packed into a tight space: the traffic, the culture, the chance encounters, the anxious bustle. Along with this proximity come certain feelings, a relative sense of security or of fear.

Over the last 13 years I have lived in a magical succession of cities: Boston, Baghdad, New York, and Beirut. They all made lovely and surprising homes—and they all were distorted to varying degrees by fear and threat.

At root, cities depend on constant leaps of faith. You cross paths every hour with people of vastly different backgrounds and trust that you’ll emerge safely. Each act of violence (a mugging, a murder, a bombing) erodes that faith. Over time, especially if there are more attacks, a city will adapt in subtle, profound, and insidious ways.

The bombing last week was a shock to Boston, and a violation. As long as it’s isolated, the city will recover. But three people have died, and more than 170 have been wounded, and the scar will remain. As we think about how to respond, it’s worth also considering what happens when cities become driven by fear.

NEW YORK, WASHINGTON, and to a lesser extent the rest of America have exchanged some of the trappings of an open society for extra security since the Sept. 11 attacks. Metal detectors and guards have become fixtures at government buildings and airports, and it’s not unusual to see a SWAT team with machine guns patrolling in Manhattan. Police conduct random searches in subways, and new buildings feature barriers and setbacks that isolate them from the city’s pedestrian life.

Baghdad and Beirut, however, are reminders of the far greater changes wrought by wars and ubiquitous random violence. A city of about 7 million, low-slung Baghdad sprawls along the banks of the Tigris River. It’s the kind of place where almost everyone has a car, and it blends into expansive suburbs on its fringes. When I first arrived in 2003, the city was reeling from the shock-and-awe bombing and the US invasion. But it was the year that followed that slowly and inexorably transformed the way people lived. First, the US military closed roads and erected checkpoints. Then, militants started ambushing American troops and planting roadside bombs; the ensuing shootouts often engulfed passersby. Finally, extremists and sectarian militias began indiscriminately targeting Iraqi civilians and government personnel—in queues at public buildings, at markets, in mosques, virtually everywhere. Order crumbled.

Baghdad became a city of walls. Wealthy homeowners blocked their own streets with piles of gravel. Jersey barriers sprang up around every ministry, office, and hotel. As the conflict widened, entire neighborhoods were sealed off. People adjusted their commutes to avoid tedious checkpoints and areas with frequent car bombings. Drive times doubled or tripled. Long lines, with invasive searches, became an everyday fact of life. The geography of the city changed. Markets moved, even the old-fashioned outdoor kind where merchants sell livestock or vegetables. Entire sub-cities sprang up to serve Shia and Sunni Baghdadis who no longer could travel through each other’s areas.

Simple civic pleasures atrophied almost overnight. No one wanted to get blown up because they insisted on going out for ice cream. The famed riverfront restaurants went dormant; no more live carp hammered with a mallet and grilled before our eyes. The water-pipe joints in the parks went out of business. Most of the social spaces that defined the city shut down. Booksellers fled Mutanabbi Street, the intellectual center of the city with its antique cafes. The amusement park at the Martyrs Monument shut its gates. Hotel bar pianists emigrated. Dust settled over the playgrounds and fountains at the strip of grassy family restaurants near Baghdad University.

In Beirut, where I moved with my family earlier this year, a generation-long conflict has Balkanized the city’s population. Here, most groups no longer trust each other at all. From 1975 to 1991 the city was split by civil war, and people moved where they felt safest. A cosmopolitan city fragmented into enclaves. Christians flocked to East Beirut, spawning a dozen new commercial hubs. Shiites squatted in the village orchards south of Beirut, and within a decade had built a city-within-a-city almost a million strong—the Dahieh, or “the Suburb.” The original downtown became a demilitarized zone, its Arabesque arcades reduced to rubble, and today has been rebuilt as a sterile, Disney-like tourist and office sector. Ras Beirut, my neighborhood, deteriorated from proudly diverse (and secular) to “Sunni West Beirut,” although it still boasts pockets of stubborn coexistence. In today’s Beirut, my block, where a Druze warlord lives across the street from a church and subsidizes the parking fees of his Shia, Sunni, and Christian neighbors, is a stark exception.

Mixing takes place, but tentatively, and because of frequent outbreaks of violence over the years, Beirutis have internalized the reflexes to fight, defend, and isolate. The result is a city alight with street life, cafes, and boutiques but which can instantaneously shift to war footing. One friend ran into his bartender at a checkpoint on the bartender’s night off, with bandoliers of bullets strapped to his chest. Even when the city appears calm, most people have laid in supplies in case an armed flare-up forces them to stay in their homes for a week. My friend’s teenage daughter keeps a change of clothes in her schoolbag in case she can’t return to her house. Uniformed private guards are everywhere, in every park, on every promenade, at every mall.

***

WITHIN A FEW weeks of our move to Beirut this year, my 5-year-old son traded his old fantasy, in which he played the doctor and assigned us roles as patients and nurses, for a new one: security guard. While we were setting up for a yard party, he arranged a few plastic chairs by the door. “I’ll check people here,” he declared. He also asked me a lot of questions about the heavily armed soldiers who stand watch on our street: “Will the army shoot me if I make a mistake?”

This is not the childhood he would have in Boston, even after this week. War-molded cities are nightmare reflections of failed states, places where government has gone into free fall, police don’t or can’t do their jobs, and normal life feels out of reach. Beirut is a kind of warning: Physically it appears normal on most days. But trust is gone. The public sphere feels wobbly and impermanent.

Boston is still lucky, with assets that Beirut lost generations ago; it has functional institutions, old communities with tangled but shared histories, and unifying cultural traditions. Boston has police that can get a job done and a baseball team that ties together otherwise divided corners of the city. One lesson of the city I live in now is that circumstances can sever these lifelines faster than we expect. Our connections require continued, perhaps redoubled, care. Without trust, a city can still be a magnificent place to live. Until all at once, it isn’t.

Should America Let Syria Fight On?

Syrian rebel fighters posed for a photo after several days of intense clashes with the Syrian army in Aleppo, Syria, in October. (AP: NARCISO CONTRERAS)

[Originally published in The Boston Globe Ideas.]

THE NEWS FROM SYRIA keeps getting worse. As it enters its third year, the civil war between the ruthless Assad regime and groups of mostly Sunni rebels has taken nearly 100,000 lives and settled into a violent, deadly stalemate. Beyond the humanitarian costs, it threatens to engulf the entire region: Syria’s rival militias have set up camp beyond the nation’s borders, destabilizing Turkey, Lebanon, and Jordan. Refugees have made frontier areas of those countries ungovernable.

United Nations peace talks have never really gotten off the ground, and as the conflict gets worse, voices in Europe and America, from both the left and right, have begun to press urgently for some kind of intervention. So far the Obama administration has largely stayed out, trying to identify moderate rebels to back, and officially hoping for a negotiated settlement—a peace deal between Assad’s regime and its collection of enemies.

Given the importance of what’s happening in Syria, it might seem puzzling that the United States is still so much on the sidelines, waiting for a resolution that seems more and more elusive with each passing week. But it is also becoming clear that for America, there’s another way to look at what’s happening. A handful of voices in the Western foreign policy world are quietly starting to acknowledge that a long, drawn-out conflict in Syria doesn’t threaten American interests; to put it coldly, it might even serve them. Assad might be a monster and a despot, they point out, but there is a good chance that whoever replaces him will be worse for the United States. And as long as the war continues, it has some clear benefits for America: It distracts Iran, Hezbollah, and Assad’s government, traditional American antagonists in the region. In the most purely pragmatic policy calculus, they point out, the best solution to Syria’s problems, as far as US interests go, might be no solution at all.

If it’s true that the Syrian war serves American interests, that unsettling insight leads to an even more unsettling question: what to do with that knowledge. No matter how the rest of the world sees the United States, Americans like to think of themselves as moral actors, not the kind of nation that would stand by as another country destroys itself through civil war. Yet as time goes on, it’s starting to look—especially to outsiders—as if America is enabling a massacre that it could do considerably more to end.

For now, the public debate over intervention in America has a whiff of hand-wringing theatricality. We could intervene to staunch the suffering but for circumstances beyond our control: the financial crisis, worries about Assad’s successor, the lingering consequences of the Iraq war. These might explain why America doesn’t stage a costly outright invasion. But they don’t explain why it isn’t sending vastly more assistance to the rebels.

The more Machiavellian analysis of Syria’s war helps clarify the disturbing set of choices before us. It’s unlikely that America would alter the balance in Syria unless the situation worsens and protracted civil war begins to threaten, rather than quietly advance, core US interests. And if we don’t want to wait for things to get that bad, then it is time for America’s policy leaders to start talking more concretely—and more honestly—about when humanitarian concerns should trump our more naked state interests.

***

MANY AMERICAN observers were heartened when the Arab uprisings spread to Syria in the spring of 2011, starting with peaceful demonstrations against Bashar al-Assad’s police state. Given Assad’s long and murderous reign, a democratic revolution seemed to offer hope. But the regime immediately responded with maximum lethality, arresting protesters and torturing some to death.

Armed rebel groups began to surface around the country, harassing Assad’s military and claiming control over a belt of provincial cities. Assad has pursued a scorched earth strategy, raining shells, missiles, and bombs on any neighborhood that rises up. Rebel areas have suffered for the better part of a year under constant strafing and sniper fire, without access to water, health care, or electricity. Iran and Russia have kept the military pipeline open, and Assad has a major storehouse of chemical weapons. While some rebel groups have been accused of crimes, the regime is disproportionately responsible for the killing, which earlier this year passed the 70,000 mark by a United Nations estimate that close observers consider an undercount.

As the civil war has hardened into a bloody, damaging standoff, many have called for a military intervention, pressing for the United States to side with one of the moderate rebel factions and do whatever it takes to propel it to victory. Liberal humanitarians focus on the dead and the millions driven from their homes by the fighting, and have urged the United States to join the rebel campaign. The right wants intervention on different grounds, arguing that the regional security implications of a failed Syria are too dangerous to ignore; the country occupies a significant strategic location, and the strongest rebel coalition, the Nusra Front, is an Al Qaeda affiliate. Given all those concerns, both sides suggest that it’s only a question of when, not if, the United States gets drawn in.

“Syria’s current trajectory is toward total state failure and a humanitarian catastrophe that will overwhelm at least two of its neighbors, to say nothing of 22 million Syrians,” said Fred Hof, an ambassador who ran Obama’s Syria policy at the State Department until last year, when he quit the administration and became a leading advocate for intervention. His feelings are widely shared in the foreign policy establishment: Liberals like Princeton’s Anne-Marie Slaughter and conservatives like Fouad Ajami have made the interventionist case, as have State Department officials behind the scenes.

Intervention is always risky, and in Syria it’s riskier than elsewhere. The regime has a powerful military at its disposal and major foreign backers in Russia and Iran. An intervention could dramatically escalate the loss of life and inflame a proxy struggle into a regional conflagration.

And yet there’s a flip side to the risks: The war is also becoming a sinkhole for America’s enemies. Iran and Hezbollah, the region’s most persistent irritants to the United States and Israel, have tied up considerable resources and manpower propping up Assad’s regime and establishing new militias. Russia remains a key guarantor of the government, a role that has cost it support throughout the rest of the Arab world. Gulf monarchies, which tend to be troublesome American allies, have invested small fortunes in the rebel side, sending weapons and establishing exile political organizations. The more the Syrian war sucks up the attention and resources of its entire neighborhood, the greater America’s relative influence in the Middle East.

If that makes Syria an unattractive target for intervention, so too do the politics and position of the combatants. For now, jihadist groups have established themselves as the most effective rebel fighters—and their distaste for Washington approaches their rage against Assad. Egos have fractured the rebellion, with new leaders emerging and falling every week, leaving no unified government-in-waiting for outsiders to support. The violent regime, meanwhile, is no friend to the West.

“I’ll come out and say it,” wrote the American historian and polemicist Daniel Pipes, in an e-mail. “Western powers should guide the conflict to stalemate by helping whichever side is losing. The danger of evil forces lessens when they make war on each other.”

Pipes is a polarizing figure, best known for his broadsides against Islamists and his critique of US policy toward the Middle East, which he usually says is naive. But in this case he’s voicing a sentiment that several diplomats, policy makers, and foreign policy thinkers have expressed to me in private. Some are career diplomats who follow the Syrian war closely. None wants to see the carnage continue, but one said to me with resignation: “For now, the war is helping America, so there’s no incentive to change policy.”

Analysts who follow the conflict up close almost universally want more involvement because they are maddened by the human toll—but many of them see national interests clearly standing in the way. “Russia gets to feel like it’s standing up to America, and America watches its enemies suffer,” one complained. “They don’t care that the Syrian state is hollowing itself out in ways that will come back to haunt everyone.”

***

IS IT EVER ACCEPTABLE to encourage a war to continue? In the policy world it’s seen as the grittiest kind of realpolitik, a throwback to the imperial age when competing powers often encouraged distant wars to weaken rivals, or to keep colonized nations compliant. During the Cold War the United States fanned proxy wars from Vietnam to Afghanistan to Angola to Nicaragua but invoked the higher principle of stopping the spread of communism, rather than admitting it was simply trying to wear out the Soviet Union.

In Syria it’s impossible to pretend that the prolonging of the civil war is serving a higher goal, and nobody, even Pipes, wants the United States to occupy the position of abetting a human-rights catastrophe. But the tradeoffs illustrate why Syria has become such a murky problem to solve. Even in an intervention that is humanitarian rather than primarily self-interested, a country needs to weigh the costs and risks of trying to help against the benefit it might realistically expect to bring, and it’s a difficult decision to get involved when those potential costs include threats to its own political interests.

So just what would be bad enough to induce the United States to intervene? An especially egregious massacre—a present-day Srebrenica or Rwanda—could fan such outrage that the White House changes its position. So too would a large-scale violation of the Chemical Weapons Convention—signed by most states in the world, but not Syria. But far more likely is that the war simmers on, ever deadlier, until one side scores a military victory big enough to convince the outside powers to pick a winner. The White House hopes that with time, rebels more to its liking will gain influence and perhaps eclipse the alarming jihadists. That could take years. Many observers fear that Assad will fall and open the way to a five- or ten-year civil war between his successor and a well-armed coalition of Islamist militias, turning Syria into an Afghanistan on the Euphrates. The only thing that seems certain is that whatever comes next will be tragic for the people of Syria.

Because this chilly if practical logic is largely unspoken, the current hands-off policy continues to bewilder many American onlookers. It would be easier to navigate the conversation about intervention if the White House, and the policy community, admitted what observers are starting to describe as the benefits of the war. Only then can we move forward to the real moral and political calculations at stake: for example, whether giving Iran a black eye is worth having a hand in the tally of Syria’s dead and displaced.

For those up close, it’s looking unhappily like a trip to a bygone era. Walid Jumblatt, the Lebanese Druze warlord, spent much of the last two years trying fruitlessly to persuade Washington and Moscow to midwife a political solution. Now he’s given up. Atop the pile of books on his coffee table sits “The Great Game,” a tale of how superpowers coldly schemed for centuries over Central Asia, heedless of the consequences for the region’s citizens. When he looks at Syria he sees a new incarnation of the same contest, where Russia and America both seek what they want at the expense of Syrians caught in the conflict.

“It’s cynical,” he said in a recent interview. “Now we are headed for a long civil war.”

Egypt’s Free-Speech Backlash

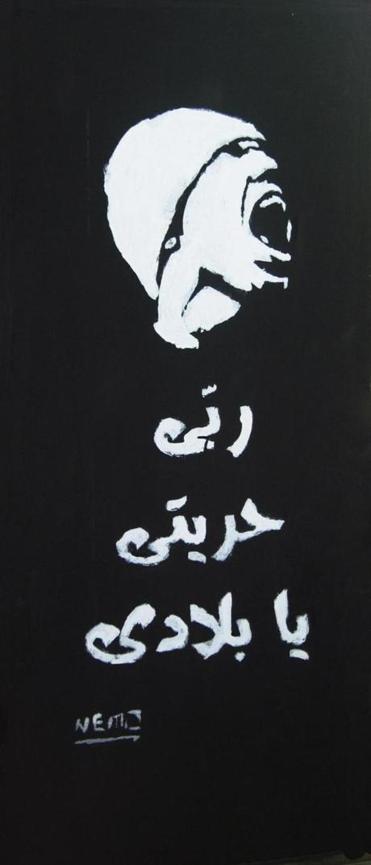

A street poster from Cairo that reads, “My God, my freedom, O my country.” Photo: NEMO.

[The Internationalist column in The Boston Globe Ideas.]

CAIRO — Every night, Egypt’s current comedic sensation, a doctor hailed as his country’s Jon Stewart, lambastes the nation’s president on TV, mocking his authoritarian dictates and airing montages that reveal apparent lies. On talk shows, opposition politicians hold forth for hours, excoriating government policy and new Islamist president Mohammed Morsi. Protesters use the earthiest of language to compare their political leaders to donkeys, clowns, and worse. Meanwhile, the president’s supporters in the Muslim Brotherhood respond in kind on their new satellite television station and in mass counter-rallies.

Before Egypt’s uprising two years ago, this kind of open debate about the president would have been unthinkable. For nearly three decades, former president Hosni Mubarak exerted near total control over the public sphere. In the twilight of his term, he imprisoned a famous newspaper editor who dared to publish speculation about the ailing president’s declining health. No one else touched the story again.

To Western observers, the freewheeling back-and-forth in Egypt right now might sound like the flowering of a young open society, one of the revolution’s few unalloyed triumphs. But amid the explosion of debate, something less wholesome has begun to arise as well. Though speech is far more open, it now carries a new and different kind of risk, one more unpredictable and sudden. Islamist officials and citizens have begun going after individuals for crimes such as blasphemy and insulting the president, and vaguer charges like sedition and serving foreign interests. The elected Islamist ruling party, the Muslim Brotherhood, pushed a new constitution through Egypt’s constituent assembly in December that expanded the number of possible free speech offenses—including insults to “all prophets.”

Worryingly, a recent report showed that President Morsi—a Brotherhood member, and Egypt’s first-ever genuinely elected, civilian leader—has invoked the law against insulting the presidency far more frequently than any of the dictators who preceded him, and has even directed a full-time prosecutor to summon journalists and others suspected of that crime.

The Muslim Brotherhood, as it rises to power, is playing host to conflicting ideas. It wants the United States to view it as a tolerant modern movement that doesn’t arbitrarily silence critics, but at the same time it needs to show its political base of socially conservative constituents in rural Egypt that it won’t tolerate irreligious speech at home. And it wants to argue that despite its religious pedigree, it is behaving within the constraints of the law.

For the time being, Egypt’s proliferating free expression still outstrips government efforts to shut it down. But as the new open society engenders pushback, what’s happening here is in many ways a test case for Islamist rule over a secular state. What’s at stake is whether Islamists—who are vying for elected power in countries around the Muslim world—really only respect the rules until they have enough clout to ignore them.

***

The text on this Cairo street poster reads, “As they breathe, they lie.” Photo: NEMO.

EGYPTIANS ARE RENOWNED throughout the Arab world for jokes and wordplay, as likely to fall from the mouth of a sweet potato peddler as a society journalist. Much of daily life takes place in the crowded public social spaces where people shop, drink hand-pressed sugarcane juice, loiter with friends, or picnic with their families. But under the stifling police state built by Mubarak, that vitality was undercut by fear of the undercover police and informants who lurked everywhere, declaring themselves at sheesha joints or cafes when the conversation veered toward politics.

As a result, a prudent self-censorship ruled the day. State security officials had desks at all the major newspapers, but top editors usually saved them the trouble, restraining their own reporters in advance. In 2005, when one publisher took the bold step of publishing a judge’s letter critical of the regime, he confiscated the cellphones of all his editors and sequestered them in a conference room so they couldn’t tip off authorities before the paper reached the streets.

It wasn’t technically illegal to be a dissident in Egypt; that the paper could be published at all was testament to the fact that some tolerance existed. Egypt’s system was less draconian and violent than the police states in Syria and Iraq, where dissidents were routinely assassinated and tortured. But the limits of public speech were well understood, and Egyptians who cared to criticize the state carefully stayed on the accepted side of the line. Activists would speak out about electoral fraud by the Ministry of the Interior or against corruption by businesspeople, for example, but would carefully refrain from criticizing the military or Mubarak’s family. Political life as we understand it barely existed.

Egypt’s uprising marked an abrupt break in this long cultural balancing act. For the first time, millions of Egyptians expressed themselves freely and in public, openly defying the intelligence minions and the guns of the police. It was shocking when people in the streets called directly for the fall of the regime. Within weeks, previously unimaginable acts had become commonplace. Mubarak’s effigy hung in Tahrir Square. Military generals were mocked as corrupt, sadistic toadies in cartoons and banners. Establishment figures called for trials of former officials and limits on renegade security officials.

In the two years since, free speech has spread with dizzying speed—on buses, during marches, around grocery stalls, everywhere that people congregate. Today there are fewer sacred cows, although even at the peak of revolutionary fervor few Egyptians were willing to risk publicly impugning the military, which was imprisoning thousands without any due process. (An elected member of parliament faced charges when he compared the interim military dictator to a donkey.)

Mohammed Morsi was inaugurated in June, after a tight election that pitted him against a former Mubarak crony. Morsi campaigned on a promise to excise the old regime’s ways from the state, and on a grandiose Islamist platform called “The Renaissance.” His regime has fared poorly in its efforts to take control of the police and judiciary. Nor has it made much progress on its sweeping but impractical proposals to end poverty and save the Egyptian economy. It has proven easier to talk about Islamic social issues: allegations of blasphemy by Christians and atheist bloggers; alcohol consumption and the sexual norms of secular Egyptians; and the idea, widely held among Brotherhood supporters, that a godless cabal of old-regime supporters is secretly plotting to seize power.

Before it won the presidency, the Muslim Brotherhood emphasized it had been fairly elected; the party was Islamist, it said, but from the pragmatic, democratic end of the spectrum. But in recent months, there’s been more than a whiff of Big Brother about the Brotherhood. Supposed volunteers attacked demonstrators outside Morsi’s presidential palace—and then were videotaped turning over their victims to Brotherhood operatives. Allegations of torture, illegal detention, and murder by state agents pile up uninvestigated.

As revolutionaries and other critical Egyptians have turned their ire from the old regime to the new, the Brotherhood also has begun targeting political speech. The new constitution, authored by the Brotherhood and forced through Egypt’s constituent assembly in an overnight session over the objections of the secular opposition and even some mainstream religious clerics, criminalized blasphemy and expanded older statutes against insults to leaders, state institutions like the courts, and religious figures. Popular journalists have been threatened with arrest, while less famous individuals, including children improbably accused of desecrating a Koran, have been thrown into detention. Morsi’s presidential advisers regularly contact human rights activists and journalists to challenge their reports, a level of attention and pressure previously unknown here.

In addition to the old legal tools to limit free expression, which are now more heavily used by the Islamists than they were by Mubarak, the new constitution has added criminal penalties for insulting all religions and empowers courts to shut down media outlets that don’t “respect the sanctity of the private lives of citizens and the requirements of national security.”


Egyptian human rights monitors have tracked dozens of such cases, including three that were filed by the president’s own legal team. Gamal Eid at the Arab Network for Human Rights Information charted 40 cases that prosecuted political critics for what amounted to dissenting speech in the first 200 days of Morsi’s regime. That’s more, he claims, than during Mubarak’s entire reign, and more charges of insulting the president than had been filed in total since 1909, when the law was first written.

***

IT’S A WELL-KNOWN PRECEPT in politics that times of transition are the most unstable, and that the fight to establish civil liberties carries risks. The current speech crackdown may just be an expected symptom of the shift from an effective authoritarian state to competitive politics. Mubarak, of course, had less need to prosecute a population that mostly kept quiet.

It could also be a sign of desperation on the part of the Brotherhood, as it struggles to rule without buy-in from the police and state bureaucracy. Or it could, more alarmingly, mark a transition to a genuine new era of censorship in the most populous Arab country, this time driven as much by the Islamist cultural agenda as by the quest to keep a grip on power.

It is that last prospect that makes the path Egypt takes so important. By dint of its size and cultural heft, the country remains a major influence across the Arab world, and both in Egypt and elsewhere, the Muslim Brotherhood is at the front lines of political Islam—trying to balance the cultural conservatism of its rank-and-file supporters with the openness the world expects from democratic society.

There are signs that the Brotherhood wants to at least make gestures toward Western norms, though it remains hard to gauge exactly how open an Egypt its members would like to see. At one point the government began an investigation of Bassem Youssef, the Jon Stewart-like TV comedian, but abruptly dropped its charges in January after a public outcry.

During the wave of bad publicity around the investigation, one of President Morsi’s advisers issued a statement claiming that the state would never interfere in free speech—so long as citizens and the press worked to raise their “level of credibility.”

“Human dignity has been a core demand of the revolution and should not be undermined under the guise of ‘free speech,’” presidential adviser Esam El-Haddad said in a statement that placed ominous boundaries on the very idea of free speech that it purported to advance. “Rather, with freedom of speech comes responsibility to fellow citizens.”

What scares many people is how the Brotherhood defines “responsibility.” A widely watched video clip portrays a Salafi cleric lecturing his followers about how Egypt’s new constitution will allow pious Muslims to limit Christian freedoms and silence secular critics (the cleric, Sheikh Yasser Borhami, is from a more fundamentalist current, separate from but allied with the Brotherhood). When critics look at the Brotherhood’s current spate of investigations and threatened prosecutions, they see the political manifestation of the same exclusionary impulse: the polarizing notion that the Islamists’ actions are blessed by God and, by implication, that to criticize them is sacrilege.

Modern Islamism hasn’t reckoned with this implicit conflict yet, even internally. Officially, one current of the Brotherhood’s ideology prioritizes social activism over politics, and eschews coercion in religious matters. But another, perhaps more popular strain in Brotherhood thinking agitates for a religious revolution in people’s daily lives, and that strain appears to be driving the behavior of the Brothers suddenly in charge of the nation. Their fervor is colliding squarely with the secular responsibility of running a state like Egypt, which for all its shortcomings has real institutions, laws, and a civil society that expects modern freedoms and protections. The first stage of Egypt’s transition from military dictatorship has ended, but the great clash between religious and secular politics is just beginning to unfold.

What really drives civil wars?

Fotini Christia with a Syrian girl in a camp for internally displaced persons (IDPs) in the Syrian village of Atmeh.

[Originally published in The Boston Globe.]

WHAT IS a civil war, really?

At one level the answer is obvious: an internal fight for control of a nation. But in the bloody conflicts that split modern states, our policy makers often understand something deeper to be at work. The vengeful slaughter that has ripped apart Bosnia, Rwanda, Syria, and Yemen is most often seen as the armed eruption of ancient and complex hatreds. Afghanistan is embroiled in a nearly impenetrable melee between Pashtuns and smaller ethnic groups, according to this thinking; Iraq is split by a long-suppressed Sunni-Shia feud. The coalitions fighting these wars are seen as motivated by the deepest sort of identity politics, ideologies concerned with group survival and the essence of who we are.

This view has long shaped America’s engagement with countries enmeshed in civil war. It is also wrong, argues Fotini Christia, an up-and-coming political scientist at MIT.

In a new book, “Alliance Formation in Civil Wars,” Christia marshals in-depth studies of the recent wars in Afghanistan, Iraq, and Bosnia, along with empirical data from 53 civil conflicts, to show that in one civil war after another, the factions behave less like enraged siblings and more like clinically rational actors, switching sides and making deals in pursuit of power. They might use compelling stories about religion or ethnicity to justify their decisions, but their real motives aren’t all that different from those of armies squaring off in any other kind of conflict.

How we understand civil wars matters. Most civil wars drag on until they’re resolved by a foreign power, which in this era almost always includes the United States. If she’s right, and we’re mistaken about what motivates the groups fighting in these internecine free-for-alls, then we’re likely to misjudge our inevitable interventions, waiting too long or guessing wrong about what to do.

***

CIVIL WARS ALWAYS have loomed large in the collective consciousness. Americans still debate theirs so vociferously that a blockbuster film about Abraham Lincoln feels topical nearly 150 years after his death. Eastern Europe saw several years of ferocious killing in the round of civil wars that followed World War II.

Such wars have been understood as fights over differences that can’t be resolved any other way: fundamental questions of ideology, identity, creed. A disputed border can be redrawn; not so an ethnic grudge. In the last two decades, identity has become the preferred explanation for persistent conflicts around the world, from Chechnya to Armenia and Azerbaijan to cleavages between Muslims and Christians in Nigeria.

This thinking allows for a simple understanding, and conveniently limits the prospect for a solution. Any identity-based cleavage—Jew vs. Muslim, Bosnian vs. Serb, Catholic vs. Orthodox—is so profoundly personal as to be immutable. The conventional wisdom is best exemplified by a seminal 1996 paper by political scientist Chaim Kaufmann, “Possible and Impossible Solutions to Ethnic Civil Wars,” which argues that bitterly opposed populations will only stop fighting when separated from each other, preferably by a major natural barrier like a river or mountain range.

During the 1990s, this sort of ethnic determinism drove American policy toward Bosnia and Rwanda. It was popularized by Robert Kaplan’s book “Balkan Ghosts,” which was read in the Clinton White House and presented the wars in the former Yugoslavia as just the latest chapter in an insoluble, four-century ethnic feud. Like Kaufmann, Kaplan suggested that the grievances in civil wars could only be managed, never reconciled.

After 9/11, policy makers in Washington continued to view civil wars through this prism, talking about tribes and sects and ethnic groups rather than minority rights, systems of government, and resource-sharing. That view was so dominant that President Bush’s team insisted on designing Iraq’s first post-Saddam governing council with seats designated by sect and ethnicity, against the advice of Iraqis and foreign experts. It became a self-fulfilling prophecy as Iraq’s ethnic civil war peaked in 2006; things settled down only after death squads had cleansed most of Iraq’s mixed neighborhoods, turning the country into a patchwork of ethnically homogeneous enclaves. Similarly, this thinking has shaped US policy in Afghanistan, where the military even sent anthropologists to help its troops understand the local culture that was considered the driving factor in the conflict.

Christia grew up in the northern Greek city of Salonica in the 1990s, with the Bosnian war raging just over the border. “It was in our neighborhood and we discussed it vividly every night over dinner,” she says. The question of ethnicity seized her imagination: Were different peoples doomed to conflict by incompatible identities? Or were the decision-makers in civil wars working on a different calculus from their emotional followers? As a graduate student at Harvard, Christia flew to Afghanistan and tried to turn a dispassionate political scientist’s eye to the question of why warlords behave the way they do.

Christia spent years studying these warlords, the factional leaders in a civil war that broke out in the late 1970s. As a graduate student and later as a professor, she returned to Afghanistan to interview some of the nastiest war criminals in the country. She concluded that culture and identity, while important for their adherents, did not seem to factor into the motives of the warlords themselves, and specifically not in their choices of wartime allies. Despite the powerful rhetoric about ethnic alliances forged in blood, warlords repeatedly flipped and switched sides. They used the same language—about tribe, religion, or ethnicity—whether they were fighting yesterday’s foe or joining him.

If ethnicity, religion, and other markers of identity didn’t matter to warlords, Christia asked, what did? It turns out the answer was simple: power. After studying the cases of Afghanistan, Bosnia, and Iraq in intricate detail, Christia built a database of 53 conflicts to test whether her theory applied more widely. She ran regression analyses and showed that it did: Warlords adjusted their loyalties opportunistically, always angling for the best slice of the future government. It’s not quite as simple as siding with the presumed winner, she says: It’s picking the weakest likely winner, and therefore the one most likely to share power with an ally.

In this model of warlord behavior, the many factions in a civil war are less like Cain and Abel and more like the mafia families in “The Godfather” trilogy. Loyalties follow business interests, and business interests change; meanwhile, the talk about family and blood keeps the foot soldiers motivated. In Bosnia, one Muslim warlord joined forces with the Serbs after the Serbs’ horrific massacre of Muslims at Srebrenica, and justified his switch by saying that the central government in Sarajevo was run by fanatics while he represented the true, moderate Islam. In case after case of intractable civil wars (Afghanistan, Lebanon, Iraq, the former Yugoslavia), Christia found similar patterns of fluid alliances.

“The elites make the decision, and then sell it to the people who follow them with whatever narrative sticks,” Christia said. “We’re both Christians? Or we’re both minorities? Or we’re both anti-communist? Whatever sticks.”

***

CHRISTIA’S WORK has been received with great interest, though not all her academic colleagues agree with her conclusions. Critics say identity is more important in civil wars than she gives it credit for, and that we ignore it at our peril. Roger Petersen, an expert on ethnic war and Eastern Europe who is a colleague of Christia’s at MIT and supervised her dissertation, argues that in some conflicts, identity (ethnic, religious, or ideological) is truly the most important factor. Leaders might make a pact with the devil to survive, but as a war heads toward its conclusion, irreconcilable enemies often end up in a fight to the death. Communists and nationalists fought for total victory in Eastern Europe’s civil wars, with no regard to their fleeting coalitions of opportunity against foreign occupiers during World War II. More recently, Bosnia’s war only ended after the country had split into ethnically cleansed cantons.

Christia acknowledges that her theory needs further testing to see if it applies in every case. She is currently studying how identity politics play out at the most local level in present-day Syria and Yemen.

If it holds up, though, Christia’s research has direct bearing on how we ought to view the conflict today in a nation like Syria. The teetering dictatorship is the stronghold of the minority Alawite sect in a Sunni-majority nation. And leader Bashar Assad has rallied his constituents on sectarian grounds, saying his regime offers the only protection for Syria’s minorities against an increasingly Sunni uprising. But Syria’s rebellion comprises dozens of armed factions, and Christia suggests that these militants, who run the gamut of ethnic and sectarian communities, will be swayed more by the prospect of power in a post-Assad Syria than by ethnic loyalty. That would mean the United States could win the loyalty of different fighting factions by ignoring who they are (Sunni, Kurd, secular, Armenian, Alawite) and by focusing instead on their willingness to side with America or international forces in exchange for guns, money, or promises of future political power.

For America, civil wars elsewhere in the world might seem like somebody else’s problem. But in reality we’re very likely to end up playing a role: Most civil wars don’t end without foreign intervention, and America is the lone global superpower, with huge sway at the United Nations. Christia suggests that Washington would do well to acknowledge early on that it will end up intervening in some form in any civil war that threatens a strategic interest. That doesn’t necessarily mean boots on the ground, but it means active funding of factions and shaping of the alliances that are doing the fighting. In a war like Syria’s, that means the United States has wasted precious time on the sidelines.

Despite her sustained look at the worst of human conflict, Christia says she considers herself an optimist: People spend most of their history peacefully coexisting with different groups, and only a tiny portion of the time fighting. And once civil wars do break out, the empirical evidence shows that hatreds aren’t eternal. “If identities mattered so much,” she says, “you wouldn’t see so much shifting around.”

What failed negotiations teach us

[Originally published in The Boston Globe Ideas.]

ROCKETS AND MORTARS have stopped flying over the border between Gaza and Israel, a temporary lull in one of the most intractable, hot-and-cold wars of our time. The hostilities of late November ended after negotiators for Hamas and Israel—who refused to talk face-to-face, preferring to send messages via Egyptian diplomats—agreed to a rudimentary cease-fire. Their tenuous accord has no enforcement mechanism and doesn’t even nod to discussing the festering problems that underlie the most recent crisis. Both sides say they expect another conflict; experience suggests it’s just a question of when.

Generations of negotiators have cut their teeth trying to forge a peace agreement between Israel and the Palestinians, and their failures are as varied as they are numerous: Camp David, Madrid, the Oslo Accords, Wye River, Taba, the Road Map. For diplomats and deal-makers around the world—even those with no particular stake in Middle East peace—Israel and Palestine have become the ultimate test of international negotiations.

For Guy Olivier Faure, a French sociologist who has dedicated his career to figuring out how to solve intractable international problems, they’re something else as well: an almost unparalleled trove of insights into how negotiations can go wrong.

For more than 20 years, Faure has studied not only what makes negotiations around the world succeed, but how they break down. From Israel and the Palestinians to the Biological Weapons Convention protocol to the ongoing talks about Iran’s nuclear program, it’s far more common for negotiations to fail than to work out. And it’s from these failures, Faure says, that we can harvest a more pragmatic idea of what we should be doing instead. “In order to not endlessly repeat the same mistakes, it is essential to understand their causes,” he says.

***

TODAY’S INTERNATIONAL order turns on successful negotiation. When we think about what’s right in the world, we’re often thinking about the results of agreements like the START treaties, which ended the nuclear arms race between the United States and the Soviet Union; the Geneva Conventions, which govern the conduct of war; or even the General Agreement on Tariffs and Trade, which took effect in 1948 and still underpins globalized free trade.

But in negotiations over the most vexing international problems—a hostage situation, a war between a central government and terrorist insurgents, a new multinational agreement—such successes are few and far between. Failure is the norm. Understandably, experts tend to focus on the wins. From US presidents to obscure third-party diplomats, negotiators pore over rare historical successes for tips rather than face the copious and dreary overall record.

Faure wants to change that focus. As an expert he straddles two worlds: He studies diplomacy academically as a sociologist at the Sorbonne, in Paris, and has also trained actual negotiators for decades, at the European Union, the World Trade Organization, and UN agencies. Over his career, he has produced 15 books spanning all the different theories behind negotiation, and ultimately concluded that negotiations that failed, or simply sputtered out inconclusively, were the most interesting. Each failure had multiple causes, but it was possible to compile a comprehensive list, and from that, consistent patterns.

In the book “Unfinished Business,” Faure and his collaborators look at what happened during a number of high-profile failures, and examine the underlying conditions of each set of talks: trust, cultural differences, psychology, the role of intermediaries, and outsiders who can derail negotiations or overload them with extraneous demands.

One of Faure’s insights concerns the mindset of negotiators—a factor negotiators themselves often believe is irrelevant, but which Faure and his colleagues believe can often determine the outcome. Incompatible values on the two sides of the table, he says, are much harder to bridge than practical differences, like an argument over a boundary or the mechanics of a cease-fire. As Faure says, “A quantity can be split, but not a value.” This is what Faure saw at work when the Palestinians and Israelis embarked on a rushed negotiation at the Egyptian seaside resort of Taba during Bill Clinton’s final month in office. The two sides had already reached an impasse at a lengthier negotiation in 2000 at Camp David. With the end of his presidency looming and Israeli elections coming up, Clinton summoned them back to the table for a no-nonsense session he hoped would bring speedy closure to disputes over borders, Jerusalem, and refugees. The Palestinians, however, felt that the two sides simply didn’t share the same view of justice and weren’t truly aiming at the same goal of two sovereign states—and so didn’t feel driven to make a deal. That mismatch of long-term beliefs, Faure says, doomed the talks.

There are other warning signs that emerge as patterns in failed talks. Time and again, parties embark on tough negotiations already convinced they will fail, a defeatism that becomes a self-fulfilling prophecy. In interviewing professional negotiators, Faure and his colleagues found that they often don’t pay much attention to the practical aspects of how to run a negotiation, a surprising lapse.

Faure and his team have found that a well-planned process is one of the best predictors of success, and that many negotiators are terrible at designing one. When the European Union and the United States talked to Iran about its nuclear program, various European countries kept adding extraneous issues to the talks, for instance linking Iran’s behavior with nukes to existing trade agreements. The additions made the negotiations unwieldy, and provoked crises over matters peripheral to the actual subject. In the case of the mediation over Cyprus, the Greek and Turkish sides didn’t bother coming up with any tangible proposed solution to negotiate over, instead talking vaguely about a Swiss model. Negotiations failed in part because neither side knew what that would mean for Cyprus.

Ultimately, Faure argues, mistrust and inflexibility tangle up negotiators more than any other factor. Negotiators often end up demonizing the other side, and as a result might embark on a process that by its structure encourages failure. For instance, Israeli and Palestinian reliance on mediators to ferry messages—even between delegations in the same resort—maximizes misunderstandings and minimizes the possibility that either side will sense a genuine opening.

***

WHAT EMERGES FROM Faure’s work, overall, is that the outlook for negotiations is usually pretty bleak—certainly bleaker than Faure himself prefers to highlight. In some cases, he suggests that diplomats should put off an outright negotiation until they’ve dealt with gaps in trust and cultural communication, or until the conflict feels “ripe” for solution to the parties involved. There’s no point, he suggests, in embarking on a negotiation if all the stakeholders are convinced it’s a waste of time—indeed, a failed negotiation can sometimes exacerbate a problem.

The most promising scenarios occur when both sides are suffering under the status quo, which creates what social scientists call a “mutually hurting stalemate,” with soldiers or civilians dying on both sides, and a “mutually enticing opportunity” if there’s a peace agreement or a prisoner swap. In that case, a decent deal will give both sides a chance to genuinely improve their lot.

Unfortunately for the many whose hopes are riding on negotiations, the truly challenging international problems of our age don’t always come with a strong incentive to compromise. In military conflicts, there is little incentive to resolve matters when a conflict is lopsided in one side’s favor (Shia versus Sunni in Iraq, Israel versus Hamas, the Taliban versus the United States in Afghanistan). The same holds in broader international agreements: They’re complicated and intractable largely because the states involved are—no matter what they say—quite comfortable with the status quo. Think about climate change: The biggest gas-guzzlers and polluters, the ones whose assent matters the most for a carbon-reduction treaty, are often the last states that will pay the price for rising oceans. Meanwhile, the poorer nations whose populations are most at the mercy of sea levels or changing weather have little clout. Just as it’s easy for a relatively secure Israel to stand pat on the Palestinian question, there are few immediate consequences for the United States and China if they sit out climate talks.

It’s not all bleak news. Even in cases where negotiations appear hamstrung—like climate change and Palestine—there are, Faure points out, plenty of other reasons to continue negotiating. Negotiations are a form of diplomacy, dialogue, and recognition, and even in failure can serve some other interests of the parties involved. But—as the impressive historical record of failed international agreements shows—it’s naive to think that they will always yield a solution.

The Crises We’re Ignoring

[Originally published in The Boston Globe.]

During the presidential campaign, two issues often seemed like the only foreign policy topics in the entire world: the Middle East and China. Those are unquestionably important: The wider Middle East contains most of the world’s oil and, currently, much of its conflict; and China is the world’s manufacturing base and America’s primary lender. But there are a host of other issues that are going to demand Washington’s sustained attention over the next four years, yet draw only a fraction of the public’s interest.

You could call them the icebergs, largely hidden challenges that lie in wait for the second Obama administration. Like all of us, when it comes to priorities, the people in Washington assume that the thing that comes to mind first must be the most important. The recent crises or tensions with Afghanistan, Benghazi, and China make these feel like the whole story. But in fact they are really just a few chapters, and the ones we’re ignoring completely may actually have the most surprises in store.

If the administration wants to stay ahead of the game, here’s what it will need to spend more of its time and energy dealing with in the coming four years.

Europe. Its recovery needs to be managed, and that requires global cooperation and money. Washington and China, along with the International Monetary Fund and the World Bank, will have to be closely involved, and that won’t happen without American leadership. Though the European crisis has already been a front-burner problem for two years, in the United States it barely cracks the public agenda except as a rhetorical bludgeon: “That guy wants to turn America into Greece!” But Europe’s importance to the global economy, and to America, is staggering: It’s the world’s largest economic bloc, worth $17 trillion, and it’s the US’s largest trading partner. If Europe goes down, we all go down.

Climate change. No politician likes to talk about climate change. It’s depressing news. It’s become highly partisan in this country, and it has no obvious solution even for those who understand the threat. It requires discussion of all kinds of hugely complex, dull-sounding science. When we do talk about it as a political issue, it’s largely as a domestic one: saving energy, dealing with the increasing fury and frequency of storms like Hurricane Sandy, investing in new infrastructure.

In fact, climate change is a massive foreign policy issue as well. On the preventive side, any emissions reduction requires cooperation across borders, both between small numbers of powerful nations, like America and China, and through massive worldwide accords like the failed Kyoto Protocol. The responses will often need to be global as well. Rising oceans and temperatures have no regard for national boundaries, and most of the world’s population lives near soon-to-be-vulnerable coastlines. Entire cities might have to move, or be rebuilt, often across borders. Sandy could cost the American Northeast close to $100 billion when all is said and done (current damage estimates already top $50 billion). Imagine the price of climate-proofing the cities where most of the world lives: Mumbai, Shanghai, Lagos, Alexandria, and so on. Climate change, if unaddressed, could well become an American security issue, propelling unrest and failed states that will spur threats against the US.

Pakistan. Like our tendency to obsess over shark attacks rather than, say, the more significant risk of getting hit by a car, we often find our foreign policy elite preoccupied with rare, dramatic potential threats rather than actual banal ones. You’ll keep hearing about Iran, which might one day have a bomb and which emits noxious rhetoric while supporting well-documented militant groups like Hezbollah. What we really need to hear more and do more about, however, is a regional power that already has nukes (90 to 120 warheads), that is reportedly planning for battlefield bombs that are easier to misplace or steal, and that sponsors rogue terrorist groups that have been regularly killing people in Afghanistan and India for years.

That country is Pakistan. Power there is split among an unstable cast of characters: a dictatorial military, super-empowered Islamic fundamentalists, and a corrupt civilian elite. A significant portion of its huge population has been radicalized, and can easily flit across borders with Iran, Afghanistan, and India. Pakistan isn’t a potential problem; it’s a huge actual problem: a driver of war in Afghanistan, a sponsor of killers of Americans, and, perennially, the only actor in the world that actively poses the threat of nuclear war. (The hot war between India and Pakistan in Kargil in 1999 was the first active conflict between two nuclear powers. It’s not talked about much, but it remains a genuine nightmare scenario.) Pakistan is also a huge recipient of American aid. We need to find leverage and work to contain, restrain, and stabilize Pakistan.

Transnational crime and drugs. When it comes to violence in the world, foreign-policy thinkers tend to think first about wars, militaries, and diplomacy. But to save money and lives, it would be smarter to think about drugs. In much of the world, the resources spent and lives lost to criminal syndicates in the drug war rival the costs of traditional conflict. Narco-states in the Andes and, increasingly, Central America make life miserable for their own inhabitants. Off-the-books criminal profits symbiotically feed international crime and terrorism. And in every region of the world, drugs provide the economic engine and financing for militias and terrorist groups; they fuel innumerable security problems, such as human trafficking, illicit weapons sales, piracy, and smuggling. Ultimately, wherever the drug business flourishes, it tends to corrode state authority, leaving vast ungoverned swaths of territory and promoting political violence and weak policing.

The United States pays a lot of attention to this problem in Afghanistan and Mexico, but it’s a drain on resources in corners of the globe that get less attention, from Southeast Asia to Africa. Washington needs to approach the international illegal drug trade like the globalized, multifaceted problem that it is, requiring international law enforcement cooperation but also smart economic solutions to change the market, including legalization.

Mexico. It feels almost painfully obvious, but it’s been a long time since a US president has prioritized our next-door neighbor. Our economies are inextricably linked. America’s supposed problem with illegal immigration is actually the organic development of a fluid shared labor market across the US-Mexico border. Meanwhile, the distant war in Afghanistan eats up an enormous amount of resources while another conflict rages on next door: Mexico’s increasingly violent drug war. Since 2006, it has claimed 50,000 lives, and the violence regularly spills over the border. Washington has collaborated piecemeal with Mexico’s government, but this is a regional conflict, involving criminal syndicates indifferent to jurisdiction. The United States needs to persuade Mexico to pursue a less violent, more sustainable strategy to counter the drug gangs, and then partner with the government there wholeheartedly.

The dangerous Internet. Cyber security might sound like a boondoggle for defense contractors looking for more money to spend on a ginned-up threat. Yet in the last year we’ve seen the real-world consequences of cyber attacks on Iran’s nuclear program, apparently orchestrated by the United States and Israel, and an effective cyber response, apparently by Iran, that hobbled Saudi Arabia’s oil industry. Harvard’s Joseph Nye points out that cyber espionage and crime already pose serious transnational threats, and recent developments show how war and terrorism will spill into our online networks, potentially threatening everything from our power supply to our personal data.

The US budget. Elementary economics usually begins with the discussion of guns vs. butter: You can’t pay for everything given limited resources, so do you eat or defend yourself? For generations, America has had the luxury of not really having to choose: The economy has mostly boomed since World War II, meaning we never had to cut anything fundamentally important. But America now faces a contracting global economy and a world in which it increasingly has to share resources with other rising powers. This is unfamiliar, and unhappy, territory: America’s next defense and foreign affairs budgets will probably be the first since the Second World War to require serious downsizing at a time when there are actual credible threats to the United States.

The Americans who reelected President Obama didn’t care that much about his foreign policy, according to polls. And, perhaps fittingly, Obama dealt with the rest of the world during his first term with competence and caution rather than with flair and executive drive. His impressive focus on Al Qaeda hasn’t been mirrored so far in the rest of his national security policy, made by a team better known for its meetings than for setting clear priorities.

In the wake of a decisive reelection, Obama will have the political latitude to shape a more creative and forward-thinking foreign policy in his second term. If he does, he’ll have to work around both deeply divided legislators and a constrained budget: We simply can’t pay for everything, from land wars to cyber threats to sea walls to protected American industries. The priorities the next administration chooses, and its ability to pass any budget at all, will dramatically shape the kind of foreign influence America wields over the next four years.

The Carter Doctrine: A Middle East strategy past its prime

[From The Boston Globe Ideas section.]

Cops say they figure out a suspect’s intentions by watching his hands, not by listening to what comes out of his mouth. The same goes for American foreign policy. Whatever Washington may be saying about its global priorities, America’s hands tend to be occupied in the Middle East, site of all America’s major wars since Vietnam and the target of most of its foreign aid and diplomatic energy.

How to handle the Middle East has become a major point in the presidential campaign, with President Obama arguing for flexibility, patience, and a long menu of options, and challenger Mitt Romney promising a tougher, more consistent approach backed by open-ended military force.

Lurking behind the debate over tactics and approach, however, is a challenge rarely mentioned. The broad strategy that underlies American policy in the region, the Carter Doctrine, is now more than 30 years old, and in dire need of an overhaul. Issued in 1980 and expanded by presidents from both parties, the Carter Doctrine now drives American engagement in a Middle East that looks far different from the region for which it was invented.

President Jimmy Carter confronted another time of great turmoil in the region. The US-supported Shah had fallen in Iran, the Soviets had invaded Afghanistan, and anti-Americanism was flaring, with US embassies attacked and burned. His new doctrine declared a fundamental shift. Because of the importance of oil, security in the Persian Gulf would henceforth be considered a fundamental American interest. The United States committed itself to using any means, including military force, to prevent other powers from establishing hegemony over the Gulf. In the same way that the Truman Doctrine and NATO bound America’s security to Europe’s after World War II, the Carter Doctrine elevated a crowded and contested Middle Eastern shipping lane to nearly the same status as American territory.

In 2012, we can look back on a decade of American engagement with the Middle East on a scale never seen before. Even the failures have been failures from which we can learn. The decade that began with the US invasion of Afghanistan and ended with a civil war in Syria holds some transformative lessons, ones that could point the next president toward a new strategy far better suited to what the modern Middle East actually looks like, and to America’s own values.

***

President Carter issued his new doctrine in what would turn out to be his final State of the Union speech in January 1980. America had been shaken by the oil shocks of the 1970s, in which the Arab-dominated OPEC asserted its control, and also by the fall of the tyrannical Mohammad Reza Pahlavi, Shah of Iran, who had been a stalwart security partner to the United States and Israel.

Nearly everyone in America and most Western economies shared Carter’s immediate goal of protecting the free flow of oil. What was significant was the path he chose to accomplish it. Carter asserted that the United States would take direct charge of security in this turbulent part of the world, rather than take the more indirect, diplomatic approach of balancing regional powers against each other and intervening through proxies and allies. It was the doctrine of a micromanager looking to prevent the next crisis.

Carter’s focus on oil unquestionably made sense, and the doctrine proved effective in the short term. Despite more war and instability in the Middle East, America was insulated from oil shocks and able to begin a long period of economic growth, in part predicated on cheap petrochemicals. But in declaring the Gulf region an American priority, it effectively tied us to a single patch of real estate, a shallow waterway the same size as Oregon, even when it was tangential, or at times inimical, to our greater goal of energy security. The result has been an ever-increasing American investment in the security architecture of the Persian Gulf, from putting US flags on foreign tankers during the Iran-Iraq war in the 1980s, to assembling a huge network of bases after Operation Desert Storm in 1991, to the outright regime-building effort of the Iraq War.

In theory, however, none of this is necessary. America doesn’t really need to worry about who controls the Gulf, so long as there’s no threat to the oil supply. What it does need is to maintain relations in the region that are friendly, or friendly enough, and able to survive democratic changes in regime—and to prevent any other power from monopolizing the region.

The Carter Doctrine, and the policies that have grown up to enforce it, are based on a set of assumptions about American power that might never have been wholly accurate. They assume America has relatively little persuasive influence in the region, but a great deal of effective police power: the ability to control major events like regional wars by supporting one side or even intervening directly, and to prevent or trigger regime change.

Our more recent experience in the Middle East has taught us the opposite lesson. It has become painfully clear over the last 10 years that America has little ability to control transformative events or to order governments around. When America has made demands, governments have resolutely not listened. Israel kept building settlements. Saudi Arabia kept funding jihadis and religious extremists. Despots in Egypt, Syria, Tunisia, and Libya resisted any meaningful reform. Even in Iraq, where America physically toppled one regime and installed another, a costly occupation wasn’t enough to create the Iraqi government that Washington wanted. The long-term outcome was frustratingly beyond America’s control.